The importance of QA ahead of an A/B test

Quality Assurance (QA) is the last step before your A/B test goes live. We cannot stress enough the importance of thorough QA: you need to be certain that every change looks and works as expected. A structured QA process is the best way to make sure your tests are high quality, error-free and reliable.

QA focus areas ahead of an A/B test

While QAing, you need to consider several aspects of an experiment to ensure a positive user experience and reliable test results. We recommend keeping a templated QA sheet that you can reuse for each test, listing all the test cases to carry out. Don't worry if you don't have one: below we highlight the seven essential areas you should cover.

Quality Assurance of design in CRO experiments

Design is the first point of contact for the user, so it is crucial to leave a positive impression. Any discrepancies or inconsistencies in the design can deter users, leading to exits and potentially hurting the conversion rate.

To mitigate these risks, some checks to consider are:

- Does the experience match the design specs?

- Do the text, fonts and colours match the design?

- Are text, images and components aligned as expected?

- Is the design effective across different breakpoints, devices & browsers? We recommend using your analytics data to determine the most important devices and browsers to focus on based on your website users.

Quality Assurance of functionality in CRO experiments

Pay extra attention to this check, because making sure components function as expected is paramount. Glitches or malfunctions can disrupt the user journey, causing frustration and abandonment.

- Do interactive components behave as expected? e.g. buttons, forms, navigation or any other interactive elements.

- Does it work in edge cases? Understand how the experiment behaves under unexpected scenarios and conditions.

- How does it handle unsuccessful actions? Consider error messaging and guidance for users when something goes wrong.

Quality Assurance of tracking in CRO experiments

Checking that tracking fires as expected is crucial: it is what lets us accurately measure the impact of the CRO experiment and gain insights into user behaviour and conversion rate performance.

- Does the test require any additional tracking code? e.g. custom integrations or tracking.

- Are the new functionality or design changes disrupting any existing tracking? e.g. if tracking currently fires based on element selectors, make sure it still fires after the changes.

- Does the dataLayer fire as expected? Check that existing and any new dataLayer pushes fire as expected and with the right structure.
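
To make the dataLayer check repeatable, a small script can confirm that the expected event was pushed with all the keys your reports rely on. The sketch below is written in JavaScript so it can be pasted into the browser console and pointed at window.dataLayer. The event name experiment_view and the keys experiment_id and variation_id are hypothetical placeholders for whatever your tracking plan specifies, and the sample array stands in for the real dataLayer.

```javascript
// Minimal sketch: check that the dataLayer contains a push for a given event
// with every key your reporting expects.
// Assumption: "experiment_view", "experiment_id" and "variation_id" are
// hypothetical names; replace them with your own tracking plan's structure.
function findMissingKeys(dataLayer, eventName, requiredKeys) {
  // Collect every push for the event we care about.
  const pushes = dataLayer.filter((entry) => entry && entry.event === eventName);
  if (pushes.length === 0) {
    return { found: false, missing: requiredKeys.slice() };
  }
  // Report keys missing from the most recent push for that event.
  const latest = pushes[pushes.length - 1];
  const missing = requiredKeys.filter((key) => !(key in latest));
  return { found: true, missing };
}

// In the browser you would pass window.dataLayer; this sample stands in for it.
const sampleDataLayer = [
  { event: "page_view" },
  { event: "experiment_view", experiment_id: "exp-123", variation_id: "1" },
];
const result = findMissingKeys(sampleDataLayer, "experiment_view", [
  "experiment_id",
  "variation_id",
]);
console.log(result); // { found: true, missing: [] }
```

Run it once in each variation: an empty missing list means the push exists and has the expected shape.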

Quality Assurance of targeting conditions in CRO experiments

Tests need to be shown to the right audiences, at the right time and on the right pages.

- Is the test targeted to the correct device type?

- Is the test targeted to the correct URL?

- Is the test targeted to the correct audience conditions?

Any inaccuracies or misconfigurations in targeting conditions can incorrectly include or exclude users from the experiment, skewing the results and leading to incorrect conclusions.
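
One way to catch targeting mistakes before launch is to write down a handful of QA scenarios, each stating whether that visitor should be in the test, and check them against the rules. The JavaScript sketch below assumes a hypothetical test aimed at mobile visitors on /checkout pages in a "returning" audience. Your testing tool evaluates the real conditions, so treat this as a way to reason about expected inclusions and exclusions rather than a copy of any tool's logic.

```javascript
// Minimal sketch of checking targeting rules against QA scenarios.
// Assumption (hypothetical): the test targets mobile visitors on URLs whose
// path starts with "/checkout", for the "returning" audience.
const targeting = {
  deviceTypes: ["mobile"],
  pathPrefix: "/checkout",
  audiences: ["returning"],
};

// A visitor is in the test only if device, URL and audience all match.
function isTargeted(visit, rules) {
  const path = new URL(visit.url).pathname;
  return (
    rules.deviceTypes.includes(visit.deviceType) &&
    path.startsWith(rules.pathPrefix) &&
    rules.audiences.includes(visit.audience)
  );
}

// Each QA scenario states whether the visitor SHOULD be in the test.
const base = "https://www.exampledomain.com";
const scenarios = [
  { url: `${base}/checkout`, deviceType: "mobile", audience: "returning", expected: true },
  { url: `${base}/checkout`, deviceType: "desktop", audience: "returning", expected: false },
  { url: `${base}/basket`, deviceType: "mobile", audience: "returning", expected: false },
  { url: `${base}/checkout`, deviceType: "mobile", audience: "new", expected: false },
];

const failures = scenarios.filter((s) => isTargeted(s, targeting) !== s.expected);
console.log(failures.length === 0 ? "All targeting scenarios pass" : failures);
```

Including negative scenarios (wrong device, wrong page, wrong audience) matters as much as the positive one, since wrongly included users skew results just as much as wrongly excluded ones.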

Quality Assurance of traffic allocation in CRO experiments

Including the right amount of traffic in the test, and in each variation, is important for reaching the required sample size and drawing meaningful insights.

- Is the correct proportion of traffic allocated to the experiment?

- Is the traffic to the variation(s) distributed correctly?
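
Once the test is live and has collected some traffic, you can check the split with a sample ratio mismatch (SRM) test: a chi-square goodness-of-fit test comparing the observed visitors per variation with the configured allocation. The JavaScript sketch below uses the standard 5% critical values for one to four degrees of freedom (two to five variations). The visitor counts are illustrative, not real data, and many teams prefer a stricter threshold for SRM alerts.

```javascript
// Minimal sketch of a sample ratio mismatch (SRM) check using a chi-square
// goodness-of-fit test. Critical values are for a 5% significance level,
// keyed by degrees of freedom (number of variations - 1).
const CHI_SQUARE_CRITICAL_005 = { 1: 3.841, 2: 5.991, 3: 7.815, 4: 9.488 };

function srmCheck(observed, expectedShares) {
  const total = observed.reduce((sum, n) => sum + n, 0);
  let chiSquare = 0;
  observed.forEach((n, i) => {
    const expected = total * expectedShares[i]; // visitors we should have seen
    chiSquare += (n - expected) ** 2 / expected;
  });
  const df = observed.length - 1;
  const critical = CHI_SQUARE_CRITICAL_005[df];
  if (critical === undefined) {
    throw new Error(`No critical value stored for df = ${df}`);
  }
  return { chiSquare, critical, mismatch: chiSquare > critical };
}

// Illustrative numbers: 50/50 split configured, 5,000 vs 5,200 visitors seen.
console.log(srmCheck([5000, 5200], [0.5, 0.5]));
// chiSquare is about 3.92, above 3.841, so mismatch is true
```

Here the expected count per variation is 5,100, so each side contributes 100² / 5,100 to the statistic. A flagged mismatch is a prompt to investigate allocation, targeting or tracking before trusting the results.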

Quality Assurance of goals in CRO experiments

Defining the right primary and secondary goals/objectives is the foundation for measuring the outcome of the test.

- Does the primary goal reflect the appropriate user action?

- Have secondary goals been configured?

By defining clear primary and secondary goals that align with business objectives and user expectations, we can effectively measure and optimise user experiences.

Quality Assurance of integrations in CRO experiments

Almost all testing tools can integrate with other tools so that you can analyse the data in multiple places. Some common examples are Google Analytics, Mixpanel, Adobe Analytics, Hotjar and Contentsquare. When you're running a campaign, make sure the relevant integrations are enabled and configured so that data is collected smoothly and accurately across platforms.

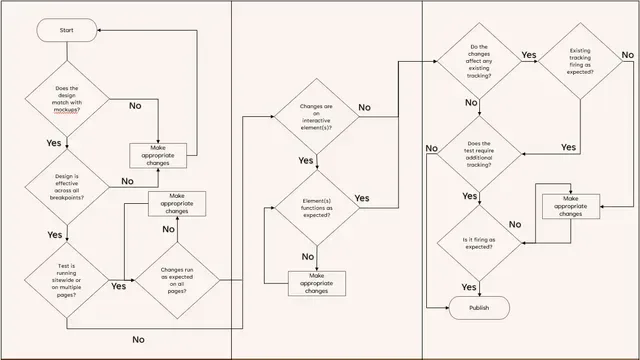

Here's an example of how the whole flow could fit together and the process that would be carried out:

Methods for QAing your website experiment

There are several methods for QAing your campaign. The options vary from tool to tool, but you can use any of the following depending on your technical background and hands-on experience with the testing tool.

QA Assistants/QA preview links

Most testing tools offer dedicated applications known as 'QA Assistants' or provide QA preview links. These let QA teams carry out all of the checks outlined above without pushing the changes live to your users. You can easily choose which variation to 'force' yourself into and then carry out the relevant checks.

Cookie-based QA

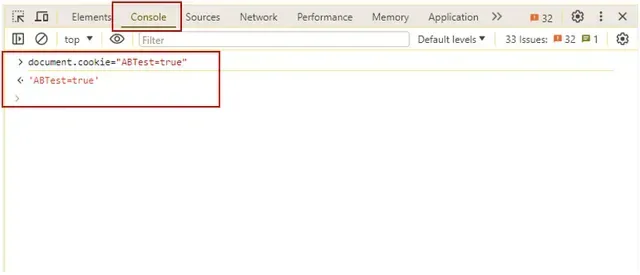

With this approach, we push the test live but add an extra targeting condition that checks whether a certain cookie has been set in the browser. This is a good way to test the experience exactly as it would run live, especially if we see unexpected behaviour with the QA links or QA assistants. Only visitors who have this cookie in their browser can see the experiment, so to see the changes you will have to set the cookie manually using your browser's developer tools.

To do this, you can follow these steps:

- Open your browser's developer tools using Ctrl + Shift + I (Cmd + Option + I on macOS), or right-click and select 'Inspect'

- Navigate to the console tab

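- Type document.cookie="ABTest=true; path=/" (the path=/ attribute makes the cookie apply to every page on the site, not just the current path; the name and value must match your targeting condition)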

- Press Enter, then reload the page so the test's targeting is evaluated with the cookie in place
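
Under the hood, a cookie-based condition simply looks for the QA cookie among the browser's cookies. The JavaScript sketch below shows that logic, assuming the ABTest=true cookie from the console step. In practice your testing tool performs this check; parseCookies and isInQaAudience are hypothetical helpers for illustration only.

```javascript
// Minimal sketch of the check a cookie-based targeting condition performs.
// Assumption: the QA cookie is named "ABTest" with the value "true".
function parseCookies(cookieString) {
  const cookies = {};
  for (const part of cookieString.split(";")) {
    const index = part.indexOf("=");
    if (index === -1) continue; // skip empty or malformed fragments
    const name = part.slice(0, index).trim();
    const value = part.slice(index + 1).trim(); // compared as-is, no decoding
    cookies[name] = value;
  }
  return cookies;
}

function isInQaAudience(cookieString) {
  return parseCookies(cookieString).ABTest === "true";
}

// In the browser you would pass document.cookie; sample strings are used here.
console.log(isInQaAudience("sessionId=abc123; ABTest=true")); // true
console.log(isInQaAudience("sessionId=abc123")); // false
```

Note that document.cookie only returns name=value pairs, not attributes such as path, which is why the check compares the value alone.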

QAing with Query Parameters

Similar to cookie-based QA, with query parameters we change the test's targeting conditions to look for a specific value in the URL that a normal user would not have. For example:

www.exampledomain.com?abtestparam=value

Be careful: if the URL already contains another parameter, append yours with an ampersand (&) rather than a second question mark:

www.exampledomain.com?param1=value1&abtestparam=value2
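
Rather than editing URLs by hand, you can let the built-in URL API decide between ? and &. This JavaScript sketch (runnable in the browser console or Node.js) uses URL and URLSearchParams; note that the URL constructor needs a full address including https://. The names abtestparam, value and value2 are the example values above, not anything a particular tool requires, and withQaParam and hasQaParam are hypothetical helpers.

```javascript
// Minimal sketch: add a QA query parameter safely, and check for it the way
// a URL-based targeting condition would.
function withQaParam(href, name, value) {
  const url = new URL(href); // requires a full URL, e.g. "https://..."
  url.searchParams.set(name, value); // uses "?" or "&" as appropriate
  return url.toString();
}

function hasQaParam(href, name, value) {
  return new URL(href).searchParams.get(name) === value;
}

console.log(withQaParam("https://www.exampledomain.com/", "abtestparam", "value"));
// https://www.exampledomain.com/?abtestparam=value
console.log(withQaParam("https://www.exampledomain.com/?param1=value1", "abtestparam", "value2"));
// https://www.exampledomain.com/?param1=value1&abtestparam=value2
```

Because searchParams.set replaces an existing value rather than duplicating the parameter, running it twice on the same URL does not produce a messy query string.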

IP Address QA

This method is easy to set up and doesn't require much technical knowledge. Look up your IP address and add it to the test's targeting setup. If you want others to see your QA version (e.g. colleagues in the same office), you can also set an IP range in your tool.

Once done, push your campaign live and you can QA your experiment.
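
If you are unsure whether a colleague's address falls inside the range you configured, the check is simple arithmetic on IPv4 addresses in CIDR notation. The JavaScript sketch below assumes a hypothetical office range of 203.0.113.0/24 (a range reserved for documentation) and does not handle IPv6. Most testing tools do this matching for you, so treat it only as a way to sanity-check a range.

```javascript
// Minimal sketch of an IPv4 CIDR range check, the kind of rule an IP-based
// QA condition applies. IPv6 is not handled.
function ipv4ToInt(ip) {
  const parts = ip.split(".");
  const valid =
    parts.length === 4 && parts.every((p) => /^\d{1,3}$/.test(p) && Number(p) <= 255);
  if (!valid) throw new Error(`Invalid IPv4 address: ${ip}`);
  // Multiply instead of bit-shifting: JavaScript bitwise ops are signed 32-bit.
  return parts.reduce((acc, p) => acc * 256 + Number(p), 0);
}

function inCidr(ip, cidr) {
  const [base, bitsText] = cidr.split("/");
  const bits = Number(bitsText);
  if (!Number.isInteger(bits) || bits < 0 || bits > 32) {
    throw new Error(`Invalid prefix length in ${cidr}`);
  }
  const blockSize = 2 ** (32 - bits); // number of addresses in the range
  const start = Math.floor(ipv4ToInt(base) / blockSize) * blockSize;
  const address = ipv4ToInt(ip);
  return address >= start && address < start + blockSize;
}

console.log(inCidr("203.0.113.42", "203.0.113.0/24")); // true
console.log(inCidr("198.51.100.7", "203.0.113.0/24")); // false
```

A /24 range covers 256 addresses and a /32 covers exactly one, which is the setting to use if only your own IP should see the test.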

Common QA mistakes

Since this step of building an experiment is crucial, I've highlighted below some common mistakes to avoid.

Only testing with emulators rather than on real devices and browsers

There are lots of emulation tools on the market that offer scalability, accessibility and a huge variety of operating systems along with hardware support. You can easily pick the OS and browser of your choice; however, emulation is not the recommended way to QA your test campaign, for the following reasons:

- Different vendors emulate hardware from different manufacturers, which can result in mismatches between the emulated hardware and the software running on it.

- Emulators are limited by the computing power and memory of the machine running them, so an emulated session may not reflect what a user would experience on a real device.

We recommend avoiding emulators for your CRO experiment QA where possible and using real devices instead.

Testing on a single browser

If your campaign runs smoothly on one browser, it doesn't mean it will behave the same way on another. For example, some CSS features are not supported in every browser. Use analytics data to check which browsers are most popular with your users, although all browsers should be considered.

Testing on a single device type

If your campaign runs seamlessly on desktop, it doesn't mean it will behave the same way on mobile. Your test should be device-friendly from both a design and a functionality perspective.
